MLOps World 2022

No edit summary |

|||

| (18 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

__TOC__ | |||

== Vendor Overview == | |||

There were two large groups of vendors. | |||

First session | * Vendors selling ML pipelines or integrated ML Ops platforms | ||

* Vendor selling model monitoring systems aka observability. | |||

== Cloud Representation == | |||

This conference was heavily AWS based. AWS was the most represented of the big three. They had their own booth. I saw no Azure representation ( but some Microsoft Research folk). | |||

I also see very little Google representation, one talk. IBM was also present. Most vendors had AWS solutions, or were hosted on AWS. Azure and GCP compatibility and offerings were scarce. | |||

== Sessions == | |||

* June 7th 10am | |||

First session Best practice | |||

Lina | Lina | ||

| Line 11: | Line 27: | ||

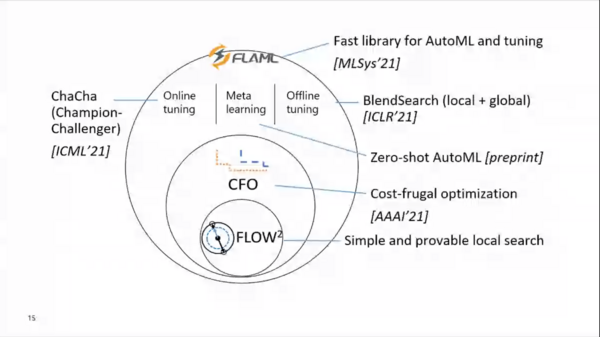

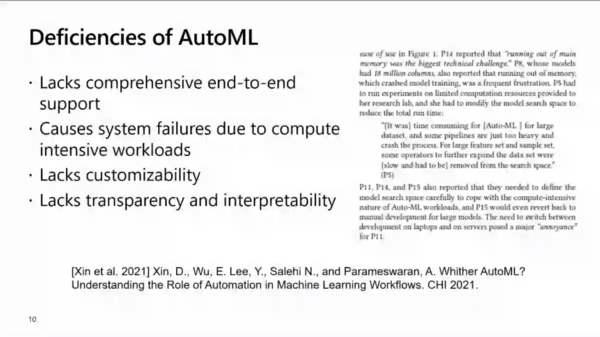

== Automated Machine Learning & Tuning with FLAML == | === Automated Machine Learning & Tuning with FLAML === | ||

11 am - 3h session. | |||

TL;DR: '''FANL is a package that will choose an algo for you, and tune the hyper parameters for you. !! Wow that's a big claim.''' | |||

[[image:faml-agenda.png|600px]] | |||

Manually tuning hyper parameters is a large effort. | |||

Costs a lot, tuning opportunity lost due to load. | |||

Also cost is not one time. | |||

The goal is to remove the hassle of tuning. | |||

https://microsoft.github.io/FLAML/ | |||

[[image:the_field_of_AutoML.png|600px]] | |||

[[image:benifits_of_AUTOML.png|600px]] | |||

[[image:deficiencies.png|600px]] | |||

=== Taking MLOps 0-60: How to Version Control, Unify Data and Management === | |||

I need to lab this out. | |||

https://labelstud.io/ | |||

https://pachyderm.io/ | |||

also: | |||

https://www.pachyderm.com/ | |||

github.com/pachyderm/examples/tree/master/label-studio | |||

=== MLOps at Scale === | |||

Shelbee aws person | |||

Cross org size | |||

Cross Industy | |||

People process technology | |||

Govern workloads | |||

Lesson 1 : start with process. | |||

What matter are the problem that grt in the way of deployment. | |||

The art of the possible sessions | |||

Outline performance expectation | |||

"If you need 100% accuracy ML is not for you" | |||

Q: executive sponsorship | |||

Ml strategy must have data strategy | |||

Need day to day access to data | |||

Must have a data catalog | |||

When you talk about pii and thousands of staff accessing it. You talk about liability to the model and such. But there is liability to the business. | |||

Cost control. Iac , as code , | |||

Support sesff to choose correct correct jsnrance sizes. | |||

Control , communication about budget and spending. | |||

Have automated process for deployment. | |||

Missing: approvals. | |||

Isn't it "just a another software deploy" | |||

Retraining plan in plan at the beginning | |||

Model monitoring | |||

Business performance | |||

Technical performance | |||

Lesson 2 you can scale with people issues. | |||

You didn't mention security people. | |||

Lots of data scientist but not data engineers and ml engineers. | |||

"Jobs that need to be done" how about "roles and responsibilities". | |||

Full stack unicorn : is a liability | |||

Sick days | |||

Technical know how | |||

Business knowledge | |||

Quality of work | |||

Keeping current | |||

Mental health | |||

Have common goals do depth are not at odd with each other. | |||

Feature store , model registry . | |||

Lesson 3 know you tradeoffs | |||

Core to Business? | |||

Effort | |||

Lesson 4 can't just use software dev process for ml. | |||

Experime Tatiana is key . Most dev cycles don't have space for that. | |||

Not every experiment should go to a pipeline | |||

But like pipelines can have sraged releases, some fast than others and you can do local test and have pipelines. | |||

With long train times you can train with every pipeline run. | |||

You need model lineage. Where did the model came ? | |||

Lesson 5 start small and iterate | |||

Think maturity model | |||

‐---------- | |||

Arize | |||

Fraud not fraud | |||

Failed not failed | |||

Feature contribution | |||

Analysis models. | |||

What is the contribution. | |||

Explainability | |||

Ground truths , fast actuals | |||

Drift is proxy to performance. | |||

Data drift, co variant drift, related to | |||

Feature drift. | |||

Time based drift, environment changed. | |||

Anomaly detect, versus drift... when a cohort has changed. Between two whole distributions. | |||

Psi pop stable index. | |||

Use drift investigation to track back to the anomaly. | |||

Google paper 'dataflow" | |||

Cat boost : titanic survival model | |||

--- | |||

Feature engineering | |||

Look for missing values | |||

Measure of central tendency | |||

Measures if percentile , why not candle sticks | |||

Biasness detection | |||

Pandas report | |||

Iv information value association value | |||

Ig information gain association value | |||

Data stability | |||

Typically ad hic... most modelers have no domain knowledge. | |||

The weak Ness of auto mstic Feature Gen is that you don't know where it came from. You don't know I'd it's just you data set. You don't know about drift. | |||

Feature recoomnedn sankey visualization | |||

Connecting attributes to features. | |||

Checks | |||

Feature stability v attribute stability | |||

Encoding . Woe weight of evidence | |||

Attributes are what go into the eda , the raw data,. Features are what you decide to treat as inputs to models. Either straight features or synthetic, generated. | |||

Optio s: | |||

Make added with features that don't drift. The model is more reliable and can be used from longer before Retraining. | |||

Or you find a very predictive feature that has drift and understand you will need to make a new model soon. | |||

== Link Dump == | |||

https://github.com/microsoft/DeepSpeed | |||

https://github.com/linkedin/greykite | |||

https://www.youtube.com/channel/UCFCbqKIQ-8mca0zmMVziAfg | |||

[[category:conference]] | |||

Latest revision as of 13:54, 21 June 2022

Vendor Overview

There were two large groups of vendors.

- Vendors selling ML pipelines or integrated MLOps platforms

- Vendors selling model monitoring (observability) systems

Cloud Representation

This conference was heavily AWS-based. AWS was the most represented of the big three clouds and had its own booth. I saw no Azure representation (but some Microsoft Research folks).

I also saw very little Google representation: one talk. IBM was also present. Most vendors had AWS solutions or were hosted on AWS. Azure and GCP compatibility and offerings were scarce.

Sessions

- June 7th 10am

First session: best practices

Lina

Not very useful to me. I joined late due to hova + Hopin technical difficulties.

Automated Machine Learning & Tuning with FLAML

11 am, 3-hour session.

TL;DR: FLAML is a package that will choose an algorithm for you and tune the hyperparameters for you. Wow, that's a big claim!

Manually tuning hyperparameters is a large effort.

It costs a lot, and tuning opportunities are lost due to workload.

Also, the cost is not one-time.

The goal is to remove the hassle of tuning.

https://microsoft.github.io/FLAML/
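The TL;DR boils down to a joint search over which learner to use and its hyperparameters, keeping whichever combination scores best on held-out data. Below is a minimal stdlib-only sketch of that idea. It uses plain random search with two toy learners (k-nearest neighbors and a threshold stump) on made-up 1-D data. The learners, data, and search space are all hypothetical. FLAML itself exposes this through its `AutoML` class and uses its own cost-aware search strategies, not this loop.

```python
import random
from typing import Callable, Dict, List, Tuple

Point = Tuple[float, int]  # (feature value, binary label)


def make_data(n: int, seed: int, noise: float = 0.1) -> List[Point]:
    """Hypothetical toy data: label is 1 when x > 0.5, with some labels flipped."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < noise:
            y = 1 - y
        data.append((x, y))
    return data


def knn_predict(train: List[Point], x: float, k: int) -> int:
    """Majority vote of the k nearest training points (ties go to 0)."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return int(2 * sum(y for _, y in nearest) > k)


def stump_predict(train: List[Point], x: float, threshold: float) -> int:
    """Threshold rule: ignores the training data, its only knob is the cut point."""
    return int(x > threshold)


# Each learner maps to (predict function, sampler for its hyperparameters).
SEARCH_SPACE: Dict[str, Tuple[Callable[..., int], Callable[[random.Random], dict]]] = {
    "knn": (knn_predict, lambda rng: {"k": rng.randint(1, 25)}),
    "stump": (stump_predict, lambda rng: {"threshold": rng.uniform(0.0, 1.0)}),
}


def error_rate(learner: str, params: dict, train: List[Point], valid: List[Point]) -> float:
    """Fraction of validation points the configured learner gets wrong."""
    predict = SEARCH_SPACE[learner][0]
    wrong = sum(predict(train, x, **params) != y for x, y in valid)
    return wrong / len(valid)


def random_search(train: List[Point], valid: List[Point], n_trials: int = 40, seed: int = 0):
    """Jointly sample (learner, hyperparameters) and keep the lowest validation error."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        learner = rng.choice(sorted(SEARCH_SPACE))
        params = SEARCH_SPACE[learner][1](rng)
        err = error_rate(learner, params, train, valid)
        if best is None or err < best[2]:
            best = (learner, params, err)
    return best


if __name__ == "__main__":
    train, valid = make_data(200, seed=1), make_data(100, seed=2)
    print(random_search(train, valid))
```

Swapping in a smarter search strategy, or a time budget instead of a trial count, only changes `random_search`. The learner registry and the scoring stay the same.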

Taking MLOps 0-60: How to Version Control, Unify Data and Management

I need to try this out in a lab.

https://labelstud.io/

https://pachyderm.io/ also: https://www.pachyderm.com/

github.com/pachyderm/examples/tree/master/label-studio

MLOps at Scale

Speaker: Shelbee (AWS)

Across org sizes, across industries

People, process, technology

Govern workloads

Lesson 1: start with process.

What matters are the problems that get in the way of deployment.

"Art of the possible" sessions

Outline performance expectations.

"If you need 100% accuracy, ML is not for you."

Q: executive sponsorship

An ML strategy must have a data strategy.

Need day-to-day access to data.

Must have a data catalog.

When you talk about PII with thousands of staff accessing it, you talk about liability to the model and such. But there is also liability to the business.

Cost control: IaC (infrastructure as code).

Help staff choose the correct instance sizes.

Control of, and communication about, budget and spending.

Have automated process for deployment.

Missing: approvals.

Isn't it "just another software deploy"?

Put a retraining plan in place at the beginning.

Model monitoring

Business performance

Technical performance

Lesson 2: you can't scale with people issues.

You didn't mention security people.

Lots of data scientists, but few data engineers and ML engineers.

Instead of "jobs that need to be done", how about "roles and responsibilities"?

A full-stack unicorn is a liability:

- Sick days

- Technical know-how

- Business knowledge

- Quality of work

- Keeping current

- Mental health

Have common goals so departments are not at odds with each other.

Feature store, model registry.

Lesson 3: know your tradeoffs.

Core to Business?

Effort

Lesson 4: you can't just use the software dev process for ML.

Experimentation is key. Most dev cycles don't have space for that.

Not every experiment should go into a pipeline.

But ML pipelines can have staged releases, some faster than others, and you can run local tests and still have pipelines.

With long training times, you can't retrain on every pipeline run.

You need model lineage: where did the model come from?
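"Where did the model come from?" is easiest to answer if every training run writes a lineage record into a registry. Below is a minimal sketch. It assumes a lineage record is just a fingerprint of the training data, a code version, the hyperparameters, and an optional parent model. The `LineageRecord` and `ModelRegistry` names and fields are hypothetical, not any particular product's API.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Dict, List, Optional


def fingerprint(obj) -> str:
    """SHA-256 of a canonical JSON encoding, so equal inputs give equal hashes."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass(frozen=True)
class LineageRecord:
    model_name: str
    data_hash: str                   # fingerprint of the training data snapshot
    code_version: str                # e.g. a git commit SHA (hypothetical field)
    params: dict                     # hyperparameters used for this training run
    parent_id: Optional[str] = None  # model this one was retrained from, if any

    @property
    def model_id(self) -> str:
        """Content-derived ID: same data, code, and params give the same ID."""
        return fingerprint(asdict(self))[:12]


class ModelRegistry:
    def __init__(self) -> None:
        self._records: Dict[str, LineageRecord] = {}

    def register(self, record: LineageRecord) -> str:
        """Store a record, refusing parents the registry has never seen."""
        if record.parent_id is not None and record.parent_id not in self._records:
            raise KeyError(f"unknown parent model {record.parent_id}")
        self._records[record.model_id] = record
        return record.model_id

    def lineage(self, model_id: str) -> List[LineageRecord]:
        """Walk from a model back through the models it was retrained from."""
        chain: List[LineageRecord] = []
        current: Optional[str] = model_id
        while current is not None:
            record = self._records[current]
            chain.append(record)
            current = record.parent_id
        return chain
```

Because `model_id` is derived from the record's contents, retraining on a new data snapshot produces a new ID whose `parent_id` points back at the old model. `lineage()` then answers the question by walking that chain.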

Lesson 5: start small and iterate.

Think in terms of a maturity model.

---

Arize

Examples: fraud / not fraud, failed / not failed.

Feature contribution: analyze the model to see what each feature contributes (explainability).

Ground truth: fast actuals. Drift is a proxy for performance when actuals arrive late.

Data drift (covariate drift), related to feature drift.

Time-based drift: the environment changed.

Anomaly detection versus drift: drift is when a cohort has changed, a comparison between two whole distributions.

PSI: population stability index.
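PSI compares how a feature's values fall into bins in a reference sample (e.g. training data) versus a current sample: PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%). Below is a minimal sketch. It assumes equal-width bins over the reference sample's range and puts a small floor on empty bins so the log stays finite. Both are assumptions, and tools differ on binning. A commonly quoted rule of thumb reads PSI below 0.1 as stable, 0.1 to 0.25 as moderate shift, and above 0.25 as significant shift.

```python
import bisect
import math
from typing import List, Sequence


def interior_edges(reference: Sequence[float], n_bins: int) -> List[float]:
    """Equal-width bin boundaries spanning the reference sample (assumed binning)."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins
    return [lo + i * width for i in range(1, n_bins)]


def bin_proportions(sample: Sequence[float], edges: List[float], floor: float = 1e-4) -> List[float]:
    """Share of the sample in each bin; values outside the edges land in the end bins."""
    counts = [0] * (len(edges) + 1)
    for x in sample:
        counts[bisect.bisect_right(edges, x)] += 1
    return [max(c / len(sample), floor) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float], n_bins: int = 10) -> float:
    """Population stability index of `actual` against the `expected` reference sample."""
    edges = interior_edges(expected, n_bins)
    e = bin_proportions(expected, edges)
    a = bin_proportions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))
```

Every term is non-negative, because (a - e) and ln(a / e) always share a sign, so PSI only grows as the two distributions pull apart.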

Use drift investigation to trace back to the anomaly.

Google paper: "Dataflow"

CatBoost: Titanic survival model

---

Feature engineering

- Look for missing values

- Measures of central tendency

- Percentile measures; why not candlesticks?

- Bias detection

- Pandas report

- IV (information value): association value

- IG (information gain): association value

- Data stability
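Information gain, one of the association measures above, is the drop in target entropy once you know a feature's value: IG = H(target) - sum over values v of P(v) * H(target | feature = v). Below is a minimal sketch for a categorical feature. It assumes entropy in bits, and the toy "survived" data in the usage lines is hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import Hashable, Sequence


def entropy(labels: Sequence[Hashable]) -> float:
    """Shannon entropy of a label sequence, in bits."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in Counter(labels).values())


def information_gain(feature: Sequence[Hashable], target: Sequence[Hashable]) -> float:
    """Entropy of the target minus its expected entropy after splitting on the feature."""
    groups = defaultdict(list)
    for value, label in zip(feature, target):
        groups[value].append(label)
    total = len(target)
    conditional = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(target) - conditional


if __name__ == "__main__":
    survived = [1, 1, 0, 0]
    print(information_gain(["f", "f", "m", "m"], survived))  # perfectly predictive feature
    print(information_gain(["f", "m", "f", "m"], survived))  # uninformative feature
```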

Typically ad hoc... most modelers have no domain knowledge.

The weakness of automatic feature generation is that you don't know where a feature came from. You don't know if it's specific to your data set. You don't know about drift.

Feature recommendation: Sankey visualization

Connecting attributes to features.

Checks

Feature stability vs. attribute stability

Encoding: WoE (weight of evidence)
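Weight of evidence encodes each category of a feature as the log ratio of its share of non-events to its share of events. Information value (IV, from the checklist above) sums those differences into one score for the whole feature: WoE_i = ln(%non-events_i / %events_i), and IV = sum over i of (%non-events_i - %events_i) * WoE_i. Below is a minimal sketch. It assumes a binary target where 1 is the event, and it adds additive smoothing (`eps`, an assumed default) so a category with no events doesn't produce ln(0). Some tools flip the ratio. That flips the sign of WoE but leaves IV unchanged.

```python
import math
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def woe_iv(
    feature: Sequence[Hashable], target: Sequence[int], eps: float = 0.5
) -> Tuple[Dict[Hashable, float], float]:
    """Per-category weight of evidence and the feature's total information value.

    target: 1 = event ("bad"), 0 = non-event ("good").
    eps: additive smoothing so a category with zero events or non-events stays finite.
    """
    events: Dict[Hashable, int] = defaultdict(int)
    non_events: Dict[Hashable, int] = defaultdict(int)
    for value, y in zip(feature, target):
        if y == 1:
            events[value] += 1
        else:
            non_events[value] += 1
    categories = set(events) | set(non_events)
    total_events = sum(events.values()) + eps * len(categories)
    total_non_events = sum(non_events.values()) + eps * len(categories)
    woe: Dict[Hashable, float] = {}
    iv = 0.0
    for cat in categories:
        pct_good = (non_events[cat] + eps) / total_non_events
        pct_bad = (events[cat] + eps) / total_events
        woe[cat] = math.log(pct_good / pct_bad)
        iv += (pct_good - pct_bad) * woe[cat]
    return woe, iv


def woe_encode(feature: Sequence[Hashable], woe: Dict[Hashable, float]) -> List[float]:
    """Replace each category with its weight of evidence."""
    return [woe[v] for v in feature]
```

A negative WoE marks a category that leans toward the event. An IV near zero says the feature barely separates the classes.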

Attributes are what go into the EDA: the raw data. Features are what you decide to treat as inputs to models, either straight features or synthetic (generated) ones.

Options:

Make a model with features that don't drift. The model is more reliable and can be used for longer before retraining.

Or you find a very predictive feature that drifts, and understand that you will need to make a new model soon.

Link Dump

https://github.com/microsoft/DeepSpeed